Voice Changer

ECE5725 Fall 2023 Project

By Wenyi Fu (wf223) and Kaiyuan Xu (kx74)

Demonstration Video

Introduction

The Voice Changer Project is an embedded system based on the Raspberry Pi 4. It captures real-time voice input and transforms it into a variety of sound effects: Elf/Monster, Optimus Prime, Echo and Hacker. The hardware consists of a USB microphone, a pair of speakers and a PiTFT touchscreen. The software uses optimized libraries for audio processing (PyAudio, NumPy and SciPy) and provides a touchscreen interface and physical buttons for immediate control.

Project Objective:

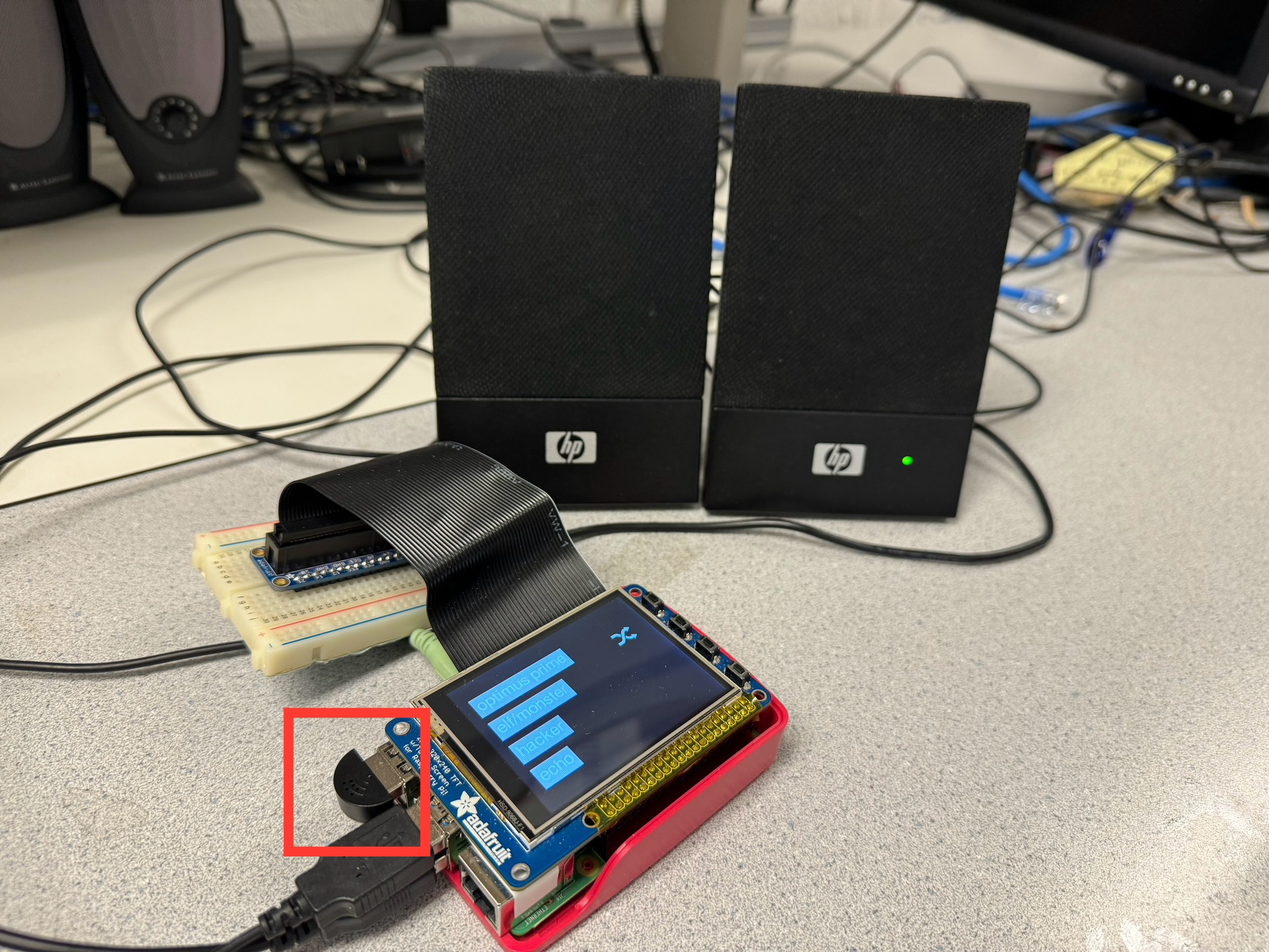

- Voice input from the USB microphone (marked by the red box) and output through the speakers.

- Implementation of the Fast Fourier Transform (FFT) to process the audio.

- Both time-domain and frequency-domain modification of voice.

- A touchscreen button interface on the PiTFT, using FIFOs to pass data between the audio program and the display program.

Design and Testing

Core Functionality and Implementation

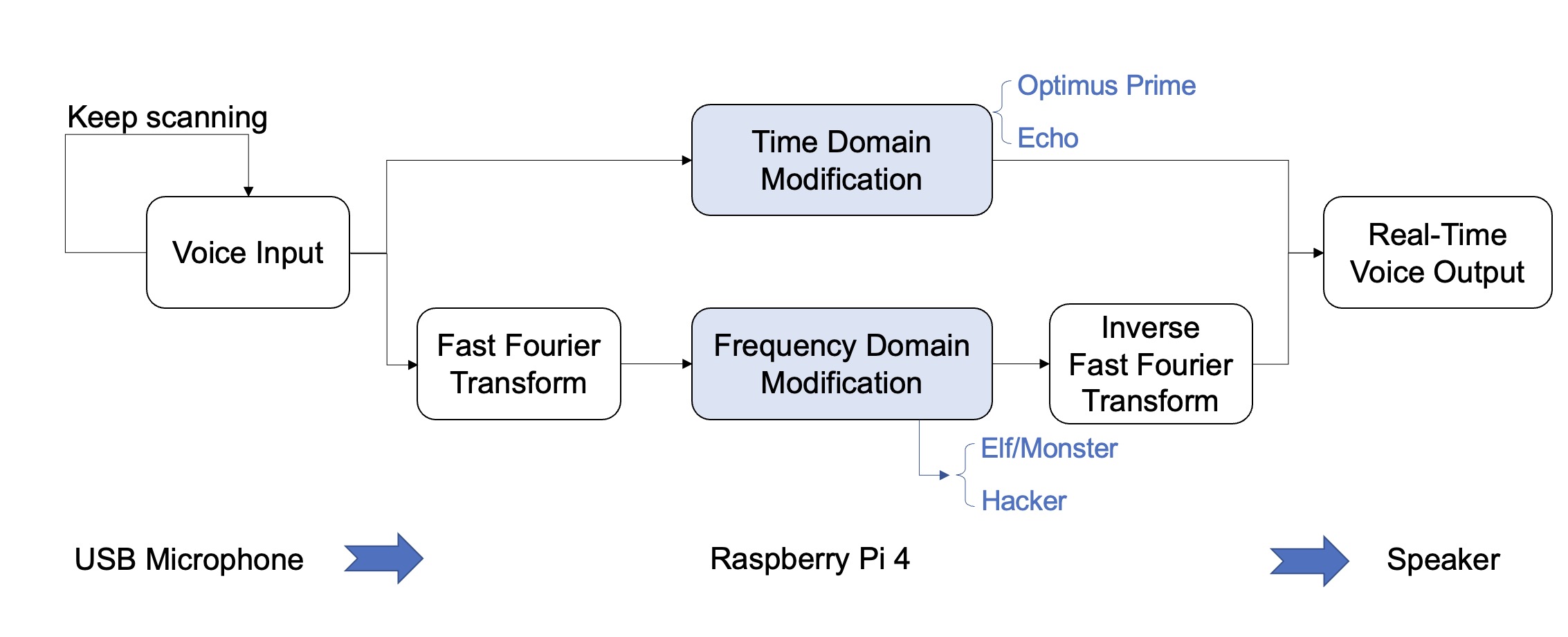

The idea is to use the Raspberry Pi to implement a real-time voice changer. When the user speaks into the microphone, the system captures the input data and processes it to alter the pitch and timbre of the voice. The modified voice is played through the speakers almost simultaneously (with a small, unavoidable lag), giving an interactive voice transformation experience. The process schematic is shown in Figure 1.

Figure 1. Voice Changing Process Diagram

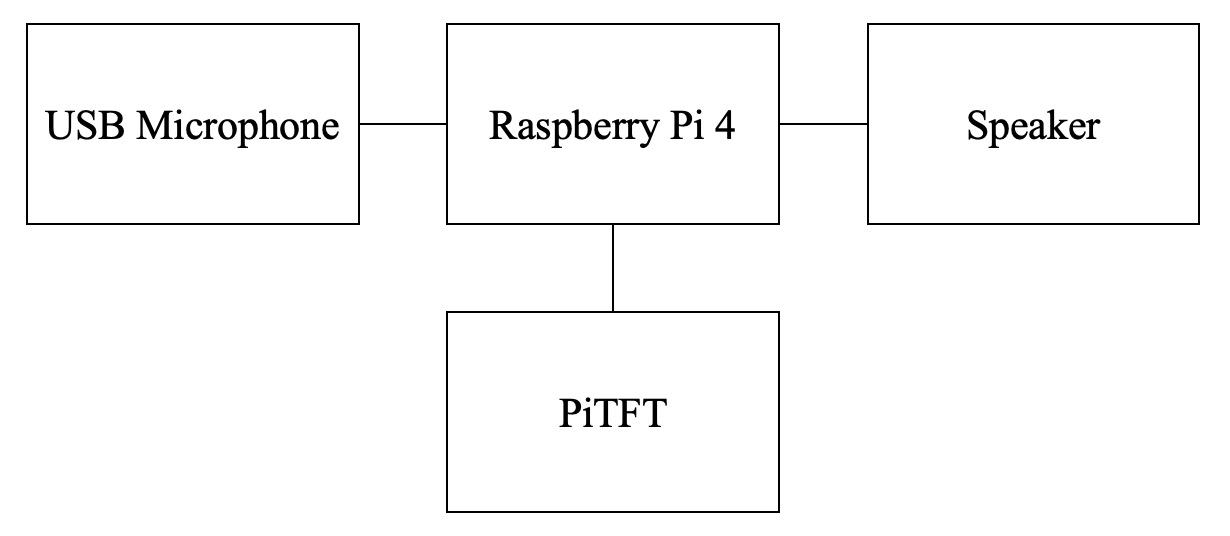

Since the Raspberry Pi 4 has no onboard microphone, an external microphone is required for audio input. We chose a mini USB microphone because it has an integrated analog-to-digital converter (ADC), so no separate analog-to-digital conversion is needed. The hardware connection is shown in Figure 2.

Figure 2. Hardware Connection

As illustrated in Figure 1, once the audio input is acquired, it is processed by one of two methods. One operates directly in the time domain. The other uses SciPy's Fast Fourier Transform routines on NumPy arrays to move the data into the frequency domain, modifies it there, and applies an inverse FFT before sending the data to the speakers. We implemented four sound effects: two by time-domain modification and two by frequency-domain modification. Note that the microphone keeps capturing input while the speakers play the processed output, which is what makes the voice changer work in real time.

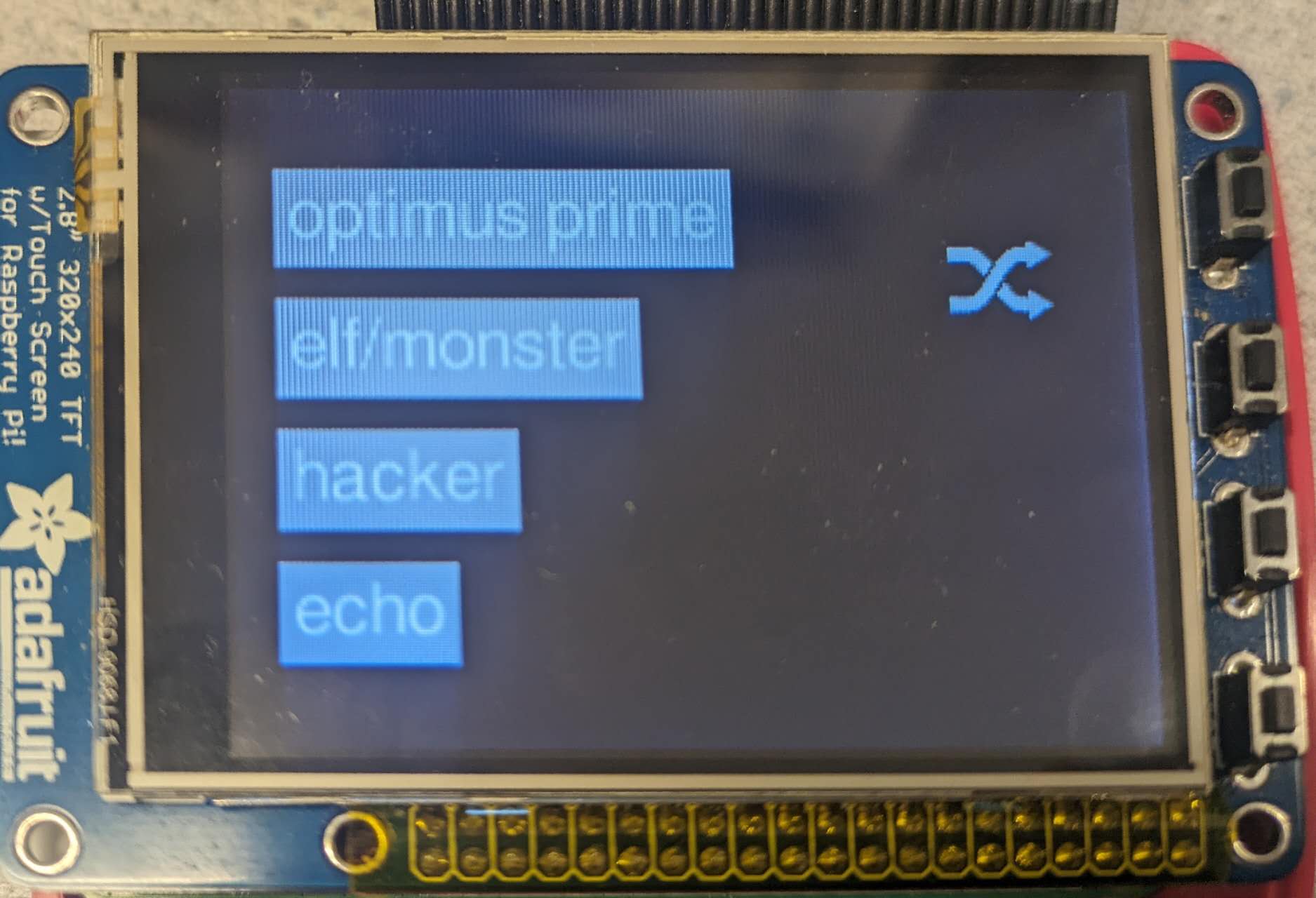

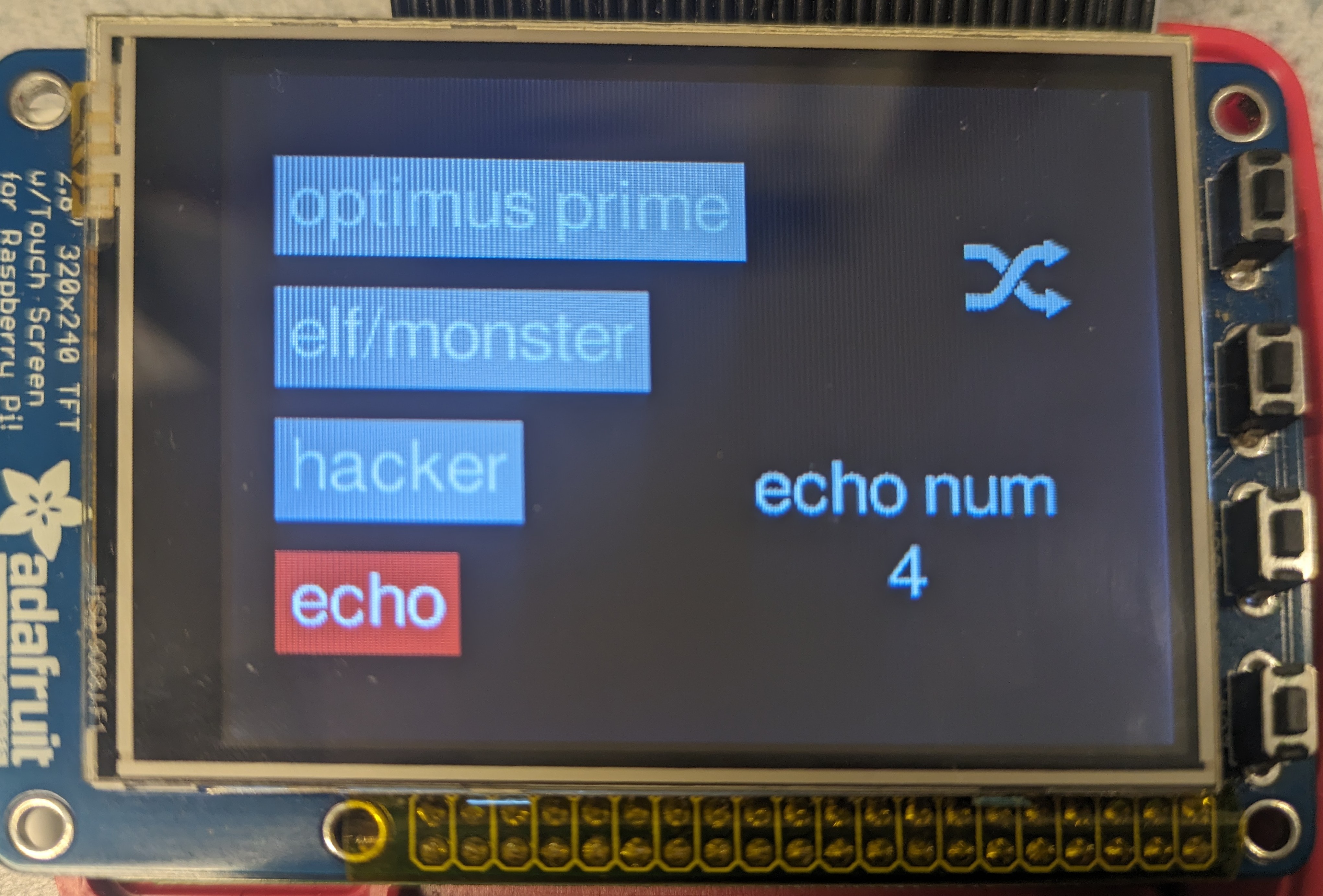

Besides real-time input-output streaming and sound processing, which are integrated together, the project includes another significant feature: the PiTFT display and touchscreen user interface, implemented with the Python pygame library. When the program starts, the PiTFT screen displays the menu shown in Figure 3. The menu offers four sound effects, each selected by pressing a touchscreen button, and a shuffle button that picks one of the four effects at random. When a sound effect is selected, its button changes color to indicate the choice.

Figure 3. The initial menu display on PiTFT

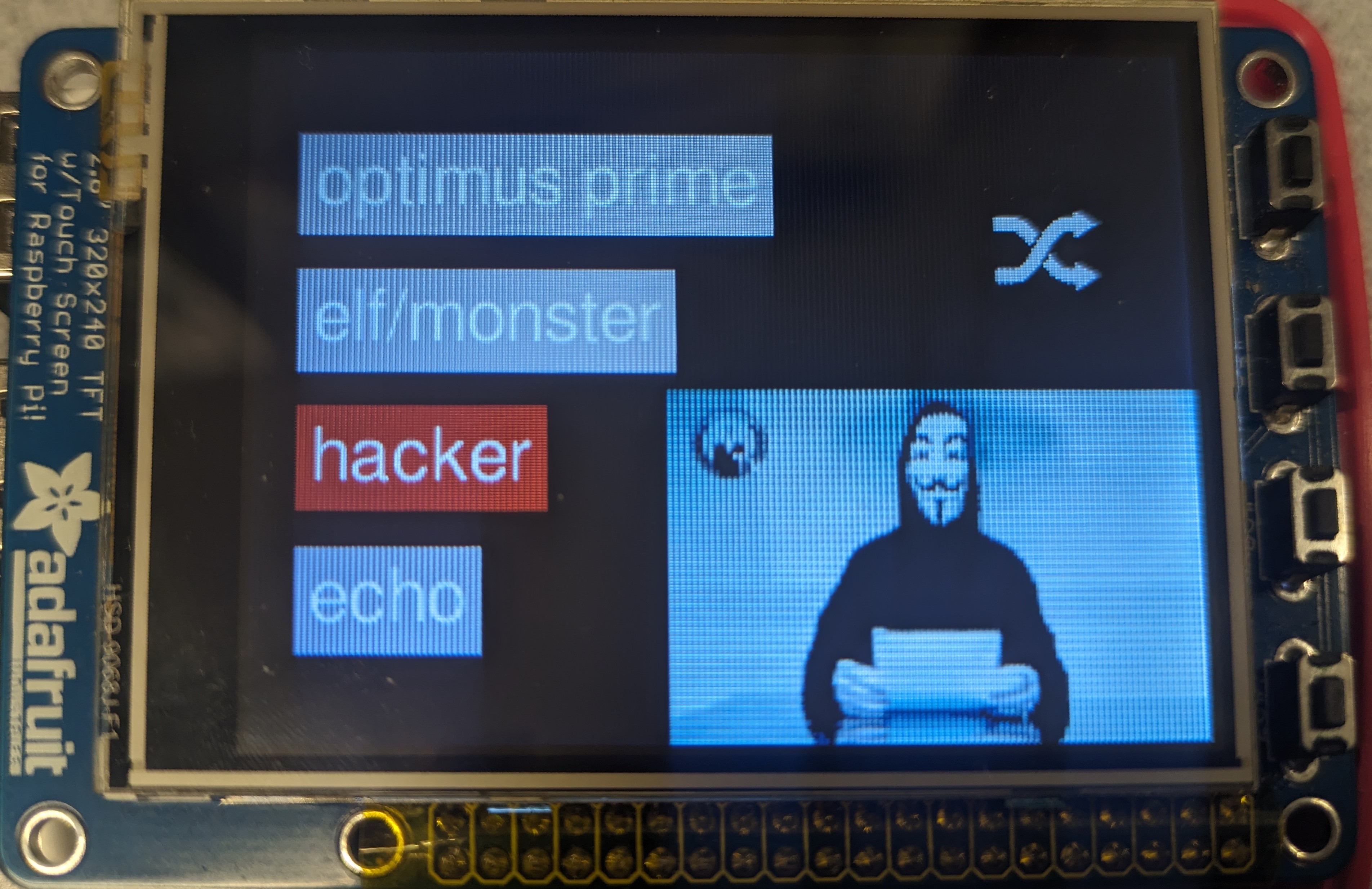

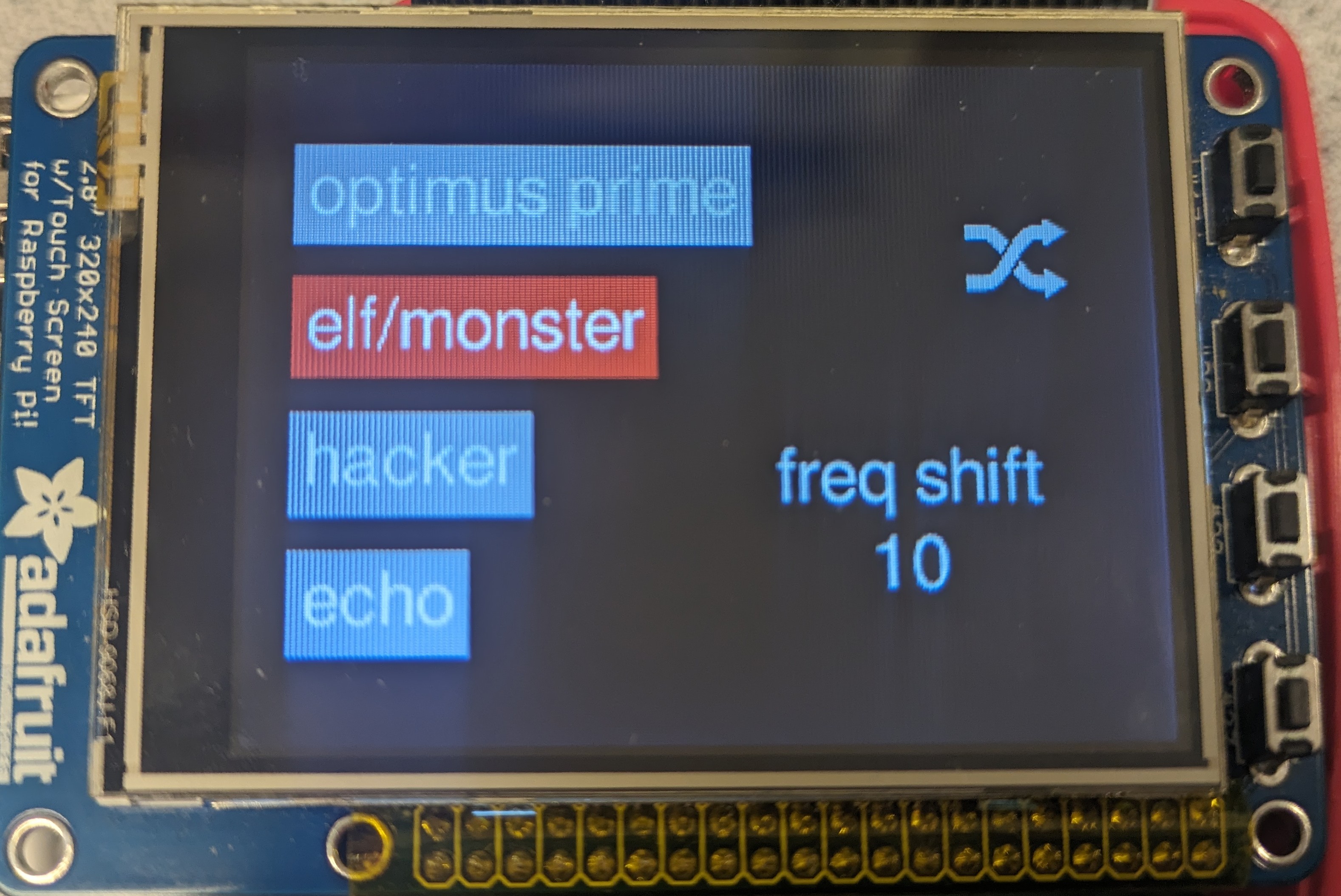

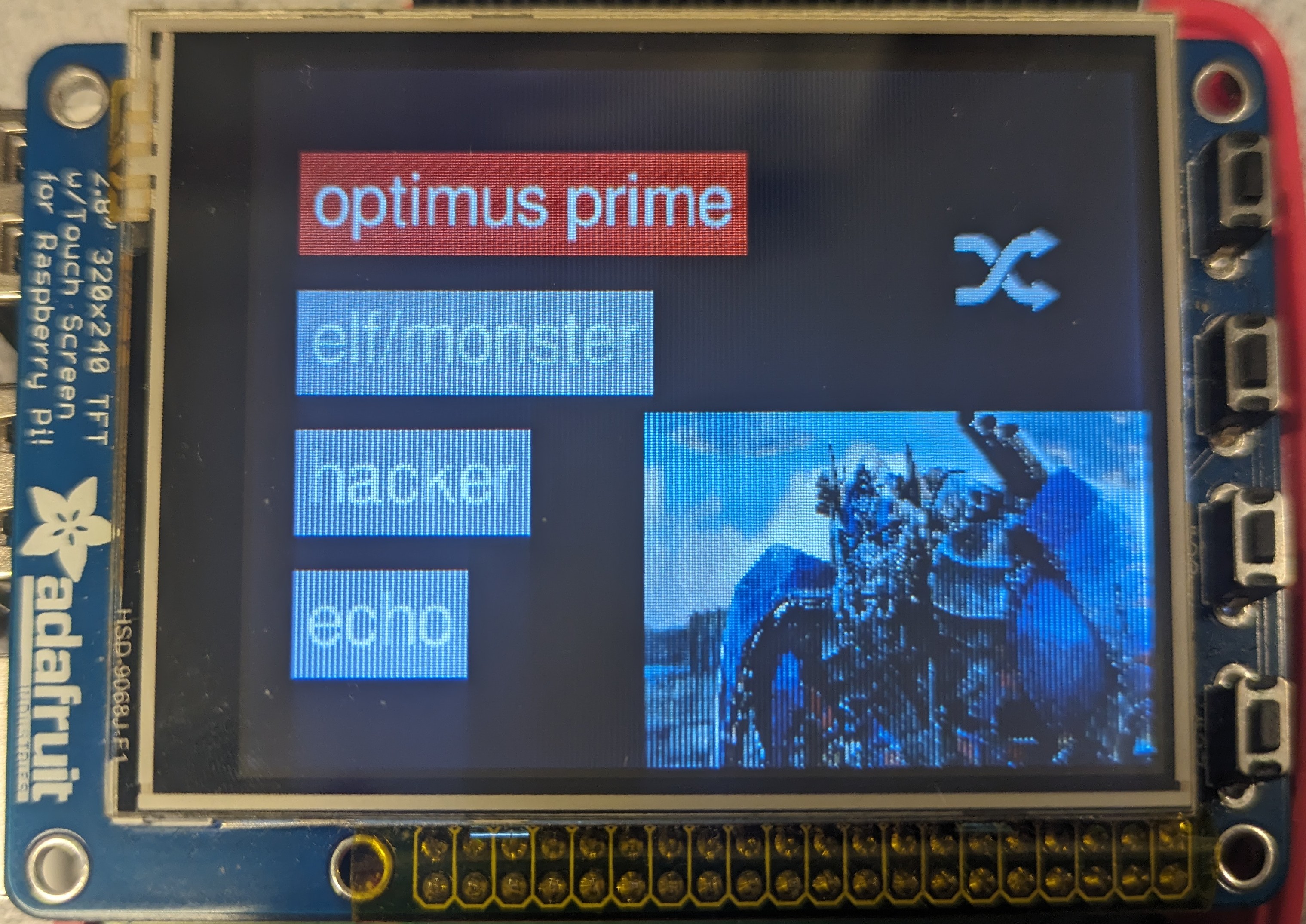

Two of the sound effects (Echo and Elf/Monster) have adjustable parameters. For these, the current parameter mode and value are displayed in the bottom right corner of the PiTFT screen (refer to Figure 4). Users adjust the value with the physical buttons (GPIO17 to increase, GPIO22 to decrease) and use GPIO23 to toggle the mode (continuous pitch sweep for Elf/Monster, echo number versus echo delay for Echo). The other two sound effects (Hacker and Optimus Prime) have no adjustable parameters. Instead, a GIF loops on the PiTFT screen for some visual enjoyment (refer to Figure 5). A bail-out button (GPIO27) is also implemented. The sound effects and their parameters are described in detail in the next section.

Figure 4. Echo sound effect with parameter displayed

Figure 5. Hacker sound effect with an animated GIF

Code Organization

The audio software is written in Python and uses the PyAudio library to read chunks of data from the microphone and to play the modified audio through the speakers. Four sound effects are implemented in total, using time-domain processing for some effects and frequency-domain processing for others. Noise reduction is also applied to improve the clarity and quality of the output audio. As mentioned in the previous section, the voice changer is made more interactive with physical buttons (read with RPi.GPIO) and a touchscreen interface (built with pygame). Together they let the user select sound effects and adjust voice modulation parameters in real time.

The pygame program must be run with 'sudo', but PyAudio conflicts with 'sudo'. The audio functionality and the touchscreen functionality are therefore split into two separate Python files. The two processes communicate through two FIFO files (named pipes), which are described in the following sections. The voice changer is launched with the following bash commands:

```bash
python /home/pi/final_project/audio.py &
sudo python /home/pi/final_project/touchscreen.py
```

audio.py

This Python file implements the sound effects and plays the modified voice through the speakers. It must not be run with 'sudo'. It consists of several blocks: two threads, the microphone callback function, the physical button callbacks and the main program.

a. Thread 1: data_sending

Thread 1 sends the current values of freq_shift_factor, echo_num and echo_delay to touchscreen.py through the FIFO called par_value_fifo. touchscreen.py displays them on the PiTFT so that users can see the parameters while tuning them.

During the FIFO writes, we sometimes got broken pipe errors, and more than one unread value accumulated in the FIFO. To debug, we extracted the FIFO read and write code from the project and tested it from the shell. We used the 'echo' command to write into the FIFO (testing the reading side) and 'cat' to read from it (testing the writing side). This pinpointed the problem efficiently: the read and write operations in the two processes were not synchronized.

At first, we thought the synchronization errors were caused by the blocking reads and writes, so we tried non-blocking methods, but the problem remained. We then found that blocking I/O was actually sufficient. The real problem was that the thread did so little work that its loop ran too frequently, and the FIFO reads and writes could not synchronize within such short intervals. We resolved the issue by adding time.sleep(0.05) to the thread loop to lengthen each iteration.
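The fix above amounts to a throttled writer loop. The sketch below is a minimal, generic version of it. It assumes a single reader that opens the FIFO, reads until end-of-file and closes it, as the readers in this project do. The function `fifo_writer` and its arguments are hypothetical names, not taken from the project code.

```python
import os
import tempfile
import threading
import time


def fifo_writer(path, get_message, should_run, period=0.05):
    """Repeatedly write the latest message into a named pipe (hypothetical helper).

    open(path, 'w') blocks until a reader opens the FIFO, and closing the file
    gives the reader end-of-file, so each iteration delivers one message.
    The sleep throttles the loop so the reader can close its end before the
    next message is written.
    """
    while should_run():
        with open(path, 'w') as fifo:
            fifo.write(get_message())
            fifo.flush()
        time.sleep(period)


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "par_value_fifo")
    os.mkfifo(path)
    stop = threading.Event()
    writer = threading.Thread(
        target=fifo_writer,
        args=(path, lambda: "num_4", lambda: not stop.is_set()),
        daemon=True,
    )
    writer.start()
    for _ in range(3):
        with open(path, 'r') as fifo:   # blocks until the writer opens its end
            print(fifo.read())          # read() returns once the writer closes
    stop.set()
```

Each reader open pairs with one writer open. Without the sleep, the writer can reopen the FIFO while the previous reader is still attached, which produces the merged values and broken pipes described above.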

b. Thread 2: freq_shift_up_down

Thread 2 continuously sweeps freq_shift_factor up and down so that the pitch of the voice changes continuously. The speed of the sweep is set by the time.sleep() interval in the loop. Because the sweep runs in its own thread, it keeps running even while the main program is blocked in a FIFO read.
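One tick of such a sweep can be written as a small pure function. This is a sketch: `sweep_step` is a hypothetical helper. The appendix code only reverses direction after the value passes ±30, whereas this version also clamps the value at the limit.

```python
def sweep_step(shift, step, limit=30):
    """Advance a back-and-forth sweep of the shift factor by one tick.

    Hypothetical helper: returns (new_shift, new_step). The direction reverses
    when the value reaches +/-limit, and the value is clamped so it never
    leaves [-limit, limit].
    """
    shift += step
    if shift >= limit or shift <= -limit:
        shift = max(-limit, min(limit, shift))
        step = -step
    return shift, step


if __name__ == "__main__":
    shift, step = 27, 1
    trace = []
    for _ in range(6):
        shift, step = sweep_step(shift, step)
        trace.append(shift)
    print(trace)  # [28, 29, 30, 29, 28, 27]
```

In the thread, each call would be followed by `time.sleep(0.1)`. The sleep interval, rather than the step size, then sets how fast the pitch glides.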

c. Microphone Callback Function

Initially, we attempted real-time playback by simultaneously recording and playing two WAV files. However, this produced noticeable noise that significantly compromised the clarity of the output voice. We therefore switched to a callback function. PyAudio calls it with each chunk of 2,048 samples, which gives nearly real-time output with a delay of less than half a second.

Within the microphone callback function, the input data is first converted into a NumPy array. After processing in either the time or the frequency domain, the data is converted back into a byte stream. At initialization, sound_effect is set to 0, which passes the original, unmodified voice through.
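The conversion pattern inside the callback is bytes, then NumPy array, then processing, then bytes again. The sketch below isolates that pattern in a hypothetical `process_chunk` helper so it can run without audio hardware. In a real PyAudio callback one would return `(process_chunk(in_data, effect), pyaudio.paContinue)`. The `effect` argument is an assumption of this sketch, standing in for the per-effect branches.

```python
import numpy as np


def process_chunk(in_data, effect):
    """Decode a paFloat32 chunk, apply `effect`, and re-encode it (hypothetical helper).

    np.frombuffer returns a read-only view of the bytes, so `effect` should
    build a new array rather than modify its input in place. The result is
    cast back to float32 so the byte layout matches the stream format.
    """
    audio_data = np.frombuffer(in_data, dtype=np.float32)
    processed = effect(audio_data)
    return np.asarray(processed, dtype=np.float32).tobytes()


if __name__ == "__main__":
    chunk = np.linspace(-1.0, 1.0, 2048, dtype=np.float32).tobytes()
    out = process_chunk(chunk, lambda x: 0.5 * x)   # a simple gain as the "effect"
    print(len(out))  # 8192 bytes: 2048 samples of 4 bytes each
```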

- Elf/Monster

When sound_effect is set to Elf/Monster with the touchscreen, a Fast Fourier Transform (FFT) converts the input audio into the frequency domain. np.roll(rfft_audio_data, freq_shift_factor) then shifts the spectrum, making the pitch higher (positive factor) or lower (negative factor). After the modification, an inverse FFT converts the data back to the time domain, and the result is converted into a byte stream and played through the speakers. The time-domain effects skip the transforms and only need the final conversion to a byte stream.
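The core of this effect is shifting the spectrum by a number of bins. The sketch below uses NumPy's complex `np.fft.rfft`/`np.fft.irfft` instead of the `scipy.fftpack.rfft` that the project imports. fftpack's `rfft` returns a packed real array with real and imaginary parts interleaved, so rolling that array by k positions does not move every frequency by exactly k bins. `shift_bins` is a hypothetical name.

```python
import numpy as np


def shift_bins(chunk, k):
    """Move every frequency component of `chunk` by k FFT bins (k > 0 raises pitch).

    np.roll wraps bins around the ends of the spectrum. The project zeroes
    such wrapped bins as part of its noise reduction; this sketch leaves them.
    """
    spectrum = np.fft.rfft(chunk)
    shifted = np.roll(spectrum, k)
    return np.fft.irfft(shifted, n=len(chunk))


if __name__ == "__main__":
    n = 256
    t = np.arange(n)
    tone = np.sin(2 * np.pi * 10 * t / n)         # exactly FFT bin 10
    out = shift_bins(tone, 5)
    print(np.argmax(np.abs(np.fft.rfft(out))))    # 15
```

A fixed bin shift adds the same frequency offset to every component instead of scaling them. This is why the shifted voice also sounds somewhat inharmonic rather than purely higher or lower.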

- Echo

When the Echo effect is selected, the audio chunks from a number of previous callbacks are stored in a history list. Earlier chunks are added to the current output at reduced amplitude, creating the echo. echo_delay sets how many chunks back the history reaches, and echo_num sets how many echoes are mixed in. Both are adjusted with the GPIO17 and GPIO22 buttons, and GPIO23 selects which one is being adjusted.
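Below is a minimal sketch of the echo mix. It assumes chunks arrive as equal-length float NumPy arrays. It follows the weighting in the appendix code (`amp_factor * (i + 1) / echo_num`, with the current chunk at full level), but it uses a `collections.deque` with `maxlen` instead of a list with `pop(0)`. `make_echo` is a hypothetical name. `delay_chunks` is measured in callback chunks, like `echo_delay`.

```python
from collections import deque

import numpy as np


def make_echo(delay_chunks, num_echoes, amp_factor=0.5):
    """Return a per-chunk echo processor (hypothetical helper).

    The last `delay_chunks` chunks are kept. Once the buffer is full, echo i
    (i = 0 .. num_echoes-1) is read from position int(delay_chunks * i / num_echoes),
    where position 0 is the oldest chunk, and scaled by
    amp_factor * (i + 1) / num_echoes, so older echoes are quieter.
    """
    delay_chunks = max(1, delay_chunks)     # an empty history has nothing to index
    history = deque(maxlen=delay_chunks)

    def process(chunk):
        history.append(chunk)
        out = chunk.copy()                  # current input at full amplitude
        if len(history) == delay_chunks:
            for i in range(num_echoes):
                past = history[int(delay_chunks * i / num_echoes)]
                out += past * amp_factor * (i + 1) / num_echoes
        return out

    return process


if __name__ == "__main__":
    echo = make_echo(delay_chunks=2, num_echoes=2)
    echo(np.ones(4, dtype=np.float32))              # buffer not full: passes through
    print(echo(np.full(4, 2.0, dtype=np.float32)))  # each sample: 2 + 0.25*1 + 0.5*2 = 3.25
```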

- Optimus Prime

The third sound effect, Optimus Prime, sums the five most recent audio chunks so that overlapping copies of the voice appear in the output. The oldest chunk has weight 1, and the weights decrease linearly to 1/5 for the current chunk.
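The overlap can be sketched the same way as the echo. This version mirrors the `(len - i) / len` weighting in the appendix code and assumes float NumPy chunks. `make_overlap` is a hypothetical name.

```python
from collections import deque

import numpy as np


def make_overlap(num_chunks=5):
    """Return a per-chunk processor that overlaps recent chunks (hypothetical helper).

    With m chunks buffered (m <= num_chunks), chunk i (i = 0 is the oldest)
    is weighted by (m - i) / m. Expects float arrays.
    """
    history = deque(maxlen=num_chunks)

    def process(chunk):
        history.append(chunk)
        m = len(history)
        out = np.zeros_like(chunk)
        for i, past in enumerate(history):
            out += past * (m - i) / m
        return out

    return process


if __name__ == "__main__":
    robot = make_overlap(num_chunks=2)
    robot(np.ones(4))
    print(robot(np.full(4, 2.0)))   # each sample: 1*2/2 + 2*1/2 = 2
```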

- Hacker

Finally, the Hacker effect adds together five pitch-lowered copies of the voice. Each copy is shifted in the frequency domain by a fixed amount (-30, -25, -20, -15 and -10), using the same kind of shift that freq_shift_factor controls.
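A sketch of the Hacker mix follows, using the five shift values from the appendix code and zeroing the top 10 bins as that code does. As in the earlier shift example, this assumes NumPy's complex `rfft` rather than `scipy.fftpack`'s packed format. `hacker_mix` is a hypothetical name.

```python
import numpy as np

HACKER_SHIFTS = (-30, -25, -20, -15, -10)   # bin shifts used in the appendix code


def hacker_mix(chunk, shifts=HACKER_SHIFTS, top_cut=10):
    """Sum several pitch-lowered copies of `chunk` in the frequency domain (sketch).

    Each copy is the spectrum rolled down by |shift| bins. np.roll wraps the
    lowest bins to the top of the spectrum, and the top `top_cut` bins are
    zeroed, as in the appendix code.
    """
    spectrum = np.fft.rfft(chunk)
    mixed = sum(np.roll(spectrum, s) for s in shifts)
    mixed[len(mixed) - top_cut:] = 0
    return np.fft.irfft(mixed, n=len(chunk))


if __name__ == "__main__":
    n = 256
    tone = np.sin(2 * np.pi * 50 * np.arange(n) / n)   # exactly FFT bin 50
    mag = np.abs(np.fft.rfft(hacker_mix(tone)))
    print(np.nonzero(mag > 1)[0])   # energy now sits at bins 20, 25, 30, 35 and 40
```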

These modifications introduce noticeable noise, which must be filtered out. At first, we applied a low-pass Butterworth filter to the audio after it was converted back to the time domain following the frequency modification. However, this increased the unwanted noise. We then adopted a simpler method: applying a window to the spectrum that removes the low-frequency or high-frequency noise introduced by shifting the spectrum right or left. In practice, this approach proved more effective at reducing noise in the output audio.
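The spectral window can be sketched as a function that clears the bins wrapped around by `np.roll`, plus a safety margin. It follows the slicing in the appendix code, which hard-codes a length of 2048 and a margin of 20. Here the length is taken from the array. `suppress_wrapped_bins` is a hypothetical name.

```python
import numpy as np


def suppress_wrapped_bins(spectrum, shift, margin=20):
    """Zero the bins that np.roll(spectrum, shift) wrapped around, plus a margin.

    For an upward shift, the lowest shift + margin bins are cleared.
    For a downward shift, the highest |shift| + margin bins are cleared.
    The input array is left unchanged.
    """
    out = np.array(spectrum, copy=True)
    n = len(out)
    if shift > 0:
        out[:shift + margin] = 0
    elif shift < 0:
        out[max(0, n + shift - margin):] = 0
    return out


if __name__ == "__main__":
    spectrum = np.fft.rfft(np.random.default_rng(0).standard_normal(2048))
    k = 15
    cleaned = suppress_wrapped_bins(np.roll(spectrum, k), k)
    print(np.count_nonzero(cleaned[:k + 20]))   # 0: wrapped bins and margin cleared
```

A plain band-stop in the frequency domain is used here because the noise being removed is the wrapped-around content that the roll itself created, so its location is known exactly.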

d. Button Callback Functions

Most importantly, a physical bail-out button on GPIO27 is required so that all programs can be exited when shutting down the device. At first, the callback only set code_run and thread_run to False, but the main loop still could not exit, because it performs a blocking FIFO read. If touchscreen.py wrote nothing into that FIFO, the main loop stayed blocked in the read and never saw that code_run had become False, so the program could not exit. The GPIO27 callback was therefore extended with a FIFO write. Since both programs use GPIO27 as their bail-out button, each one writes to the FIFO that the other program is blocked reading:

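```python
# Opening the FIFO for writing releases the other program's blocked read;
# the reader ignores the empty message
with open(par_value_fifo_path, 'w') as fifo:
    fifo.write('')
    fifo.flush()
```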

GPIO17 and GPIO22 increase or decrease the frequency shift factor, the echo number and the echo delay. For the Elf/Monster effect, the two buttons change freq_shift_factor in steps of 5 within the range -30 to 30. The factor is the number of steps by which the frequency-domain data is shifted right or left, that is, how much higher or lower the pitch becomes. A third button, GPIO23, turns on a mode that continuously sweeps the frequency shift across that range. For the Echo effect, GPIO17 and GPIO22 increase or decrease either the echo number (0 to 20, in steps of 1) or the echo delay (in steps of 10, minimum 0). GPIO23 switches between adjusting the echo number and the echo delay.
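The clamping logic shared by the button callbacks can be expressed as one table-driven helper. This is a sketch: the step sizes and limits come from the callbacks in the appendix code, but `LIMITS` and `adjust` are hypothetical names, and the real callbacks use separate global variables instead of a dictionary.

```python
# (step, minimum, maximum) for each adjustable parameter, taken from the
# button callbacks in audio.py; echo_delay has no upper limit there.
LIMITS = {
    "freq_shift_factor": (5, -30, 30),
    "echo_num": (1, 0, 20),
    "echo_delay": (10, 0, None),
}


def adjust(params, name, direction):
    """Step params[name] up (direction=+1) or down (-1) and clamp it (hypothetical helper)."""
    step, low, high = LIMITS[name]
    value = max(low, params[name] + direction * step)
    if high is not None:
        value = min(high, value)
    params[name] = value
    return value


if __name__ == "__main__":
    params = {"freq_shift_factor": 15, "echo_num": 4, "echo_delay": 50}
    adjust(params, "freq_shift_factor", +1)   # GPIO17 press: 15 -> 20
    adjust(params, "echo_delay", -1)          # GPIO22 press: 50 -> 40
    print(params)
```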

e. Main Program

In the main program, all the button callback functions are registered, and the audio stream with its callback function is opened by the following code:

```python
p = pyaudio.PyAudio()
stream = p.open(format=pyaudio.paFloat32,
                channels=CHANNELS,
                rate=RATE,
                output=True,
                input=True,
                stream_callback=callback)

# Start the stream
stream.start_stream()
```

The main loop then receives the sound-effect selection through the FIFO called sound_effect_fifo. While both stream.is_active() and code_run are True, it repeatedly performs a blocking read of the value that touchscreen.py writes into the FIFO.
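A single iteration of that loop can be factored into a small helper, sketched below. `read_sound_effect` is a hypothetical name. In the real loop it would be called as `sound_effect = read_sound_effect(sound_effect_fifo_path, sound_effect)` inside `while stream.is_active() and code_run:`. For booleans, `and` is the idiomatic equivalent of the `&` used in the appendix code.

```python
import os
import tempfile


def read_sound_effect(fifo_path, current):
    """Blocking read of one sound-effect value from a FIFO (hypothetical helper).

    open() blocks until a writer opens the FIFO, and readline() returns the
    line, or everything up to end-of-file if there is no newline. An empty
    message, such as the one written by the bail-out callback, keeps the
    current effect.
    """
    with open(fifo_path, 'r') as fifo:
        data = fifo.readline().strip()
    return int(data) if data else current


if __name__ == "__main__":
    # A regular file stands in for the FIFO here; open() on a real FIFO
    # would block until touchscreen.py opened it for writing.
    path = os.path.join(tempfile.mkdtemp(), "sound_effect_fifo")
    with open(path, 'w') as f:
        f.write("2")
    print(read_sound_effect(path, current=0))  # 2
```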

touchscreen.py

This python file implements the functionality of PiTFT display and touchscreen user interface. With the pygame library imported, the file must be run with the 'sudo' command. This code file is mainly composed of these parts: the callback function for the physical bail out button, two threads for writing/reading FIFO files respectively, and the main function that includes the PiTFT display and touchscreen control features.

a. Thread 1: fifo_write()

As its name indicates, this thread writes data into a FIFO called sound_effect_fifo, which is read by audio.py, completing the data transfer between the two processes. The value sent is an integer from 0 to 4. The initial value 0 leaves the voice unmodified, and 1 to 4 select one of the four sound effects according to the user's choice on the PiTFT touchscreen. audio.py then applies the corresponding audio processing.

b. Thread 2: fifo_read()

This thread reads data from a FIFO called par_value_fifo, which is written by audio.py. Data is sent only for the Echo and Elf/Monster sound effects, as these are the effects with adjustable parameters. For Elf/Monster, the message is a single integer: the current freq_shift_factor (for example “15” or “-10”). For Echo, the message has the form “{mode}_{integer}”, where the mode is “num” or “delay” (echo_num or echo_delay) and the integer is the parameter value (for example “num_4”). The thread splits the string on the underscore to recover the mode and the value, and the main function uses them to display the corresponding content on the PiTFT.
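The parsing step can be isolated as a small function. The sketch below mirrors the split logic in touchscreen.py and returns `(mode, value)`. `parse_par_value` is a hypothetical name, and returning `(None, None)` for an empty message is a choice made for this sketch (the project code simply skips empty reads).

```python
def parse_par_value(message):
    """Split a par_value_fifo message into (mode, value) (hypothetical helper).

    "15" or "-10" (Elf/Monster)   -> (None, 15) / (None, -10)
    "num_4" or "delay_50" (Echo)  -> ("num", 4) / ("delay", 50)
    An empty message (e.g. from the bail-out callback) -> (None, None)
    """
    parts = message.strip().split("_")
    if len(parts) == 1 and parts[0]:
        return None, int(parts[0])
    if len(parts) == 2:
        return parts[0], int(parts[1])
    return None, None
```

Splitting on the underscore works for negative shift factors because the minus sign never collides with the separator.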

c. Button Callback Function

Like audio.py, touchscreen.py requires a physical bail-out button. GPIO 27 serves as the bail-out button for both Python programs, since they run simultaneously and a single button press should exit the entire voice changer application. As described above, when the GPIO 27 button is pressed, the callback function sets code_run and thread_run to False to terminate the corresponding while loops. It also writes an empty string into sound_effect_fifo. This releases audio.py, which is blocked reading that FIFO, so that it does not hang and both programs can terminate.

d. The Main Function

Besides setting up an event detection for the GPIO pin, configuring threads, and performing necessary variable initialization, the main code is primarily responsible for all the display and touchscreen controls on the PiTFT.

For the user interface, the code uses the pygame library to draw buttons on the screen, including their backgrounds and text (refer to Figure 3). Button colors change depending on whether a particular sound effect has been selected. For the Elf/Monster and Echo sound effects, the screen displays the current adjustable parameter name and value (refer to Figure 4 and Figure 6) obtained from the fifo_read thread. If the selected sound effect is Hacker or Optimus Prime, a corresponding GIF is animated on the PiTFT screen (refer to Figure 5 and Figure 7).

Figure 6. Elf/Monster sound effect with parameter displayed

Figure 7. Optimus Prime sound effect with an animated GIF

Because of limitations in our version of pygame, we could not play GIFs directly on the screen. Instead, we split each GIF into individual frames and displayed them in sequence, using a counter variable, gif_frame_count, to loop through the frames.
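The frame selection reduces to modular arithmetic on the counter. The sketch below assumes one counter increment per main-loop pass. The `hold` parameter, which keeps each frame on screen for several passes, and the name `frame_index` are hypothetical additions, not taken from the project code.

```python
def frame_index(gif_frame_count, num_frames, hold=1):
    """Pick which GIF frame to draw on this pass of the main loop (hypothetical helper).

    Each frame is shown for `hold` consecutive loop iterations, and the
    sequence wraps around so the animation loops indefinitely.
    """
    return (gif_frame_count // hold) % num_frames


if __name__ == "__main__":
    print([frame_index(c, num_frames=5, hold=2) for c in range(12)])
    # [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 0, 0]
```

With pygame, the drawing step would then be something like `screen.blit(frames[frame_index(gif_frame_count, len(frames))], frame_rect)`, followed by incrementing the counter.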

The code also detects touchscreen presses through pygame's event handling mechanism. When the user touches the PiTFT screen, the touch coordinates are recorded and compared with the button areas to check whether a button was pressed. If so, the corresponding sound effect is selected. Pressing the “shuffle” button instead selects a random sound effect.
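The hit test can be sketched without pygame so that it runs anywhere. pygame's `Rect.collidepoint` performs the same check. The effect codes follow the `Effects` enum in the appendix code, and the shuffle area matches a 40x40 icon centred at (270, 70). The effect button rectangles, however, are illustrative assumptions rather than the project's exact layout.

```python
import random

# Effect codes used by both programs (Effects enum in the appendix code).
ELF, ECHO, ROBOT, ALIEN = 1, 2, 3, 4

# Hypothetical touch areas as (x, y, width, height) on the 320x240 PiTFT.
BUTTON_RECTS = {
    ROBOT: (20, 20, 120, 30),    # "optimus prime"
    ELF: (20, 68, 120, 30),      # "elf/monster"
    ALIEN: (20, 116, 120, 30),   # "hacker"
    ECHO: (20, 164, 120, 30),    # "echo"
}
SHUFFLE_RECT = (250, 50, 40, 40)  # 40x40 shuffle icon centred at (270, 70)


def contains(rect, pos):
    """Point-in-rectangle test; the right and bottom edges are exclusive."""
    x, y, w, h = rect
    px, py = pos
    return x <= px < x + w and y <= py < y + h


def handle_touch(pos, current, rng=random):
    """Return the sound effect selected by a touch at `pos`, or `current` if none."""
    if contains(SHUFFLE_RECT, pos):
        return rng.choice(list(BUTTON_RECTS))
    for effect, rect in BUTTON_RECTS.items():
        if contains(rect, pos):
            return effect
    return current
```

In the pygame event loop, `pos` would come from a `MOUSEBUTTONUP` event's `pos` attribute. A touch outside every button leaves the current selection unchanged.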

Results

Optimus Prime

Original Voice

Modified Voice

Elf/Monster

Original Voice

Modified Voice: Monster

Modified Voice: Elf

Echo

Original Voice

Modified Voice

Hacker

Original Voice

Modified Voice

Conclusions

The project achieved its objective of a real-time voice changer with four sound effects: Optimus Prime, Elf/Monster, Echo and Hacker. Effects are switched with the touchscreen buttons on the PiTFT, while parameters are adjusted with the physical buttons (GPIO 17, 22 and 23). A bail-out button on GPIO 27 exits both programs.

To make the system truly “embedded”, we tried to use crontab to start the program automatically on boot, so that no extra peripherals such as a keyboard would be needed to launch it. However, although our bash script ran smoothly when executed manually from any directory, it failed when launched by crontab after the Raspberry Pi rebooted. Unfortunately, we were not able to identify the root cause of this issue.

Overall, this was an interesting project that achieved its initial goals. We applied knowledge from the lectures and labs, including the PiTFT touchscreen, threads, GPIO buttons and crontab.

Future Work

If we had more time to work on the project, we would expand the range of sound effects to include options like telephone, underwater, melody, and others. Additionally, we would enhance the audio quality by applying more advanced noise reduction filters to make the output audio more audible and clearer.

Also, it would be great if we could add more visual effects to the voice changer. We would use an external monitor as an extended display for visual elements like the gifs, and potentially add more animations. This configuration would enable our voice changer to simulate voice modulation synchronized with the content displayed on the monitor, acting as a “voice-over” for the gifs or animations presented on the screen.

Work Distribution

Wenyi Fu

wf223@cornell.edu

Worked with Kaiyuan to implement, test and debug real-time audio stream

Implementation of Elf/Monster and Hacker effects

Wrote code for sound effects and audio playback, audio.py

Kaiyuan Xu

kx74@cornell.edu

Worked with Wenyi to implement, test and debug real-time audio stream

Implementation of Echo and Optimus Prime effects

Wrote code for PiTFT display and touchscreen control, touchscreen.py

Parts List

- Raspberry Pi: $35.00 (provided in lab)

- PiTFT: $44.95 (provided in lab)

- USB microphone: $5.95 (provided in lab)

- Speakers (provided in lab)

- Keyboard (provided in lab)

Total: $85.90

References

Real-Time Audio Signal Processing Using Python

USB Microphone Setting

SciPy FFT

Numpy Frequency Shift

PyAudio Documentation

FIFO Data Transmission

Visual FFT

Code Appendix

voice_changer.sh

```bash
#!/bin/bash
python /home/pi/final_project/audio.py &
sudo python /home/pi/final_project/touchscreen.py
```

audio.py

```python
# By Wenyi Fu (wf223), Kaiyuan Xu (kx74)
# Final Project - Voice Changer

############################################################################
################# Import Libraries and Initialize GPIO #####################
############################################################################

import pyaudio
import numpy as np
import time
import os
import RPi.GPIO as GPIO
from scipy import signal
from scipy.fftpack import fft, rfft, irfft
from enum import Enum
from threading import Thread
import subprocess

GPIO.setmode(GPIO.BCM)  # Set for GPIO numbering not pin numbers
GPIO.setup(27,GPIO.IN,pull_up_down=GPIO.PUD_UP)  # Bail out button
GPIO.setup(17,GPIO.IN,pull_up_down=GPIO.PUD_UP)  # Button to increase freq
GPIO.setup(22,GPIO.IN,pull_up_down=GPIO.PUD_UP)  # Button to decrease freq
GPIO.setup(23,GPIO.IN,pull_up_down=GPIO.PUD_UP)  # Button to change effect of echo and freq

############################################################################
################### Button Callback Functions ##############################
############################################################################

# Button to quit the program
def GPIO27_callback(channel):
    global code_run
    global thread_run
    code_run = False
    thread_run = False
    global freq_shift_factor
    with open(par_value_fifo_path, 'w') as fifo:
        fifo.write('')
        fifo.flush()

# Button to increase freq
def GPIO17_callback(channel):
    global freq_shift_factor
    global sound_effect
    global echo_effect
    global echo_delay_par
    global echo_num_par
    global echo_num
    global echo_delay
    if (sound_effect == Effects.ELF.value):
        freq_shift_factor = freq_shift_factor + 5
        if (freq_shift_factor > 30):
            freq_shift_factor = 30
    elif (sound_effect == Effects.ECHO.value):
        if (echo_effect == echo_num_par):
            echo_num = echo_num + 1
            if (echo_num > 20):
                echo_num = 20
        if (echo_effect == echo_delay_par):
            echo_delay = echo_delay + 10

# Button to decrease freq
def GPIO22_callback(channel):
    global freq_shift_factor
    global echo_num
    global echo_delay
    global sound_effect
    global echo_effect
    global echo_num_par
    global echo_delay_par
    if (sound_effect == Effects.ELF.value):
        freq_shift_factor = freq_shift_factor - 5
        if (freq_shift_factor < -30):
            freq_shift_factor = -30
    elif (sound_effect == Effects.ECHO.value):
        if (echo_effect == echo_num_par):
            echo_num = echo_num - 1
            if (echo_num < 0):
                echo_num = 0
        if (echo_effect == echo_delay_par):
            echo_delay = echo_delay - 10
            if (echo_delay < 0):
                echo_delay = 0

# Button to start continuously shift freq
def GPIO23_callback(channel):
    global continue_freq_on
    global echo_effect
    global sound_effect
    global echo_num_par
    global echo_delay_par
    if (sound_effect == Effects.ELF.value):
        if (continue_freq_on == 0):
            continue_freq_on = 1
        else:
            continue_freq_on = 0
    if (sound_effect == Effects.ECHO.value):
        if (echo_effect == echo_num_par):
            echo_effect = echo_delay_par
        else:
            echo_effect = echo_num_par

############################################################################
########################## Thread Definition ###############################
############################################################################

# Thread to send data of freq shift and echo num/delay
def DATA_SENDING():
    global thread_run
    global freq_shift_factor
    global sound_effect
    global echo_delay
    global echo_num
    global echo_effect
    global echo_num_par
    thread_run = True
    while thread_run:
        # print("thread entered")
        with open(par_value_fifo_path, 'w') as fifo:
            if (sound_effect == Effects.ELF.value):
                fifo.write(str(freq_shift_factor))
            elif (sound_effect == Effects.ECHO.value):
                # Determine which echo effect to send
                print('echo_effect' ,echo_effect)
                if (echo_effect == echo_num_par):
                    fifo.write('num_'+ str(echo_num))
                else:
                    fifo.write('delay_'+ str(echo_delay))
            fifo.flush()
        time.sleep(0.05)

# Thread to continuously shift the freq
def FREQ_SHIFT_UP_DOWN():
    global thread_run
    global continue_freq_on
    global freq_shift_factor
    global incre
    while thread_run:
        # Turn on/off the continue freq shift
        if (continue_freq_on):
            freq_shift_factor = freq_shift_factor + incre
            if (freq_shift_factor > 30):
                incre = incre * -1
            elif (freq_shift_factor < -30):
                incre = incre * -1
        time.sleep(0.1)

############################################################################
####################### FIFO Path Definition ###############################
############################################################################

directory_path = "/home/pi/final_project"
par_value_fifo_path = "/home/pi/final_project/par_value_fifo"
sound_effect_fifo_path = "/home/pi/final_project/sound_effect_fifo"

# fifo for control the sound effect type
if not os.path.exists(sound_effect_fifo_path):
    os.mkfifo(sound_effect_fifo_path)
# fifo for sending the data to display current freq and echo number
if not os.path.exists(par_value_fifo_path):
    os.mkfifo(par_value_fifo_path)

subprocess.run(["sudo", "chmod", "o+w", directory_path])
subprocess.run(["sudo", "chmod", "o+w", par_value_fifo_path])
subprocess.run(["sudo", "chmod", "o+w", sound_effect_fifo_path])

############################################################################
########################## Parameter Definition ############################
############################################################################

# Parameters for the microphone input
CHANNELS = 2
RATE = 44100
pitch_factor = 0.5

# Parameters to implement elf/monster effect
freq_shift_factor = 15
incre = 1
continue_freq_on = 0

# Parameters to control the sound effect
sound_effect_previous = 0
sound_effect = 0
class Effects(Enum):
    ELF = 1
    ECHO = 2
    ROBOT = 3
    ALIEN = 4

# Parameter to control echo effect
audio_history = []
amp_factor = 0.5
echo_num = 4
echo_delay = 50
echo_delay_par = 0
echo_num_par = 1
echo_effect = echo_num_par

# Initialize
############################################################################
############### Microphone and Speaker Callback Function ##################
############################################################################

def callback(in_data, frame_count, time_info, flag):
    global freq_shift_factor
    global echo_num
    global echo_delay
    # global amp_modify

    # using Numpy to convert to array for processing
    audio_data = np.frombuffer(in_data, dtype=np.float32)

    if (sound_effect == Effects.ELF.value):
        # Time domain -> Frequency domain
        rfft_audio_data = rfft(audio_data)
        # Acceptable range: -30 ~ 30
        shifted_rfft_audio_data = np.roll(rfft_audio_data, freq_shift_factor)
        # Reduce the noise
        if (freq_shift_factor > 0):
            shifted_rfft_audio_data[:(freq_shift_factor+20)] = 0
        if (freq_shift_factor < 0):
            shifted_rfft_audio_data[(2048+freq_shift_factor-20):] = 0
        # Frequency domain -> Time domain
        irfft_audio_data = irfft(shifted_rfft_audio_data)
        # Convert the data back into byte stream
        changed_data = irfft_audio_data.tobytes()

    elif (sound_effect == Effects.ECHO.value):
        if (sound_effect_previous != Effects.ECHO.value):
            audio_history.clear()
        len_audio_history = echo_delay
        # Initialization: fill in the history array
        if (len(audio_history) < len_audio_history):
            audio_history.append(audio_data)
        else:
            audio_history.pop(0)
            audio_history.append(audio_data)
        output_data = 0
        # Add echoes
        if (len(audio_history) == len_audio_history):
            for i in range(echo_num):
                output_data += audio_history[int(len_audio_history*(i/echo_num))] * amp_factor * (i+1)/echo_num
        # Add the current input audio data
        output_data += audio_history[-1]
        # Output data flow
        # Convert the data back into byte stream
        changed_data = output_data.tobytes()

    elif (sound_effect == Effects.ROBOT.value):
        if (sound_effect_previous != Effects.ROBOT.value):
            audio_history.clear()
        len_audio_history = 5
        # Initialization: fill in the history array
        if (len(audio_history) < len_audio_history):
            audio_history.append(audio_data)
        else:
            audio_history.pop(0)
            audio_history.append(audio_data)
        # Could also append shifted data
        output_data = 0
        for i in range(len(audio_history)):
            output_data += audio_history[i] * (len(audio_history)-i) / len(audio_history)
        # Convert the data back into byte stream
        changed_data = output_data.tobytes()

    elif (sound_effect == Effects.ALIEN.value):
        # Time domain -> Frequency domain
        rfft_audio_data = rfft(audio_data)
        # Add low and high components
        low1 = np.roll(rfft_audio_data, -30)
        low2 = np.roll(rfft_audio_data, -20)
        low3 = np.roll(rfft_audio_data, -10)
        low4 = np.roll(rfft_audio_data, -25)
        low5 = np.roll(rfft_audio_data, -15)
        rfft_audio_data_add = low1 + low2 + low3 + low4 + low5
        # Reduce the noise
        rfft_audio_data_add[(2048 -10):] = 0
        # Frequency domain -> Time domain
        irfft_audio_data = irfft(rfft_audio_data_add)
        # Convert the data back into byte stream
        changed_data = irfft_audio_data.tobytes()

    else:
        # Time domain -> Frequency domain
        rfft_audio_data = rfft(audio_data)
        # Frequency domain -> Time domain
        irfft_audio_data = irfft(rfft_audio_data)
        # Convert the data back into byte stream
        changed_data = irfft_audio_data.tobytes()

    return changed_data, pyaudio.paContinue

##############################################################################
############################ Main Code ######################################
##############################################################################

# Define the callback event
GPIO.add_event_detect(27,GPIO.FALLING,callback=GPIO27_callback,bouncetime=300)
GPIO.add_event_detect(17,GPIO.FALLING,callback=GPIO17_callback,bouncetime=300)
GPIO.add_event_detect(22,GPIO.FALLING,callback=GPIO22_callback,bouncetime=300)
GPIO.add_event_detect(23,GPIO.FALLING,callback=GPIO23_callback,bouncetime=300)

# Start the Thread
t1 = Thread(target=DATA_SENDING)
t2 = Thread(target=FREQ_SHIFT_UP_DOWN)
t1.start()
t2.start()

# Initialize the main code and the thread
code_run = True

# Open the sound stream
p = pyaudio.PyAudio()
stream = p.open(format=pyaudio.paFloat32,
                channels=CHANNELS,
                rate=RATE,
                output=True,
                input=True,
                stream_callback=callback)
stream.start_stream()

# Loop to read from the sound effect control fifo
while (stream.is_active() & code_run):
    time.sleep(0.1)
    # Blocking read
    with open(sound_effect_fifo_path, 'r') as fifo:
        data = fifo.readline().strip()
        if (data):
            sound_effect_previous = sound_effect
            sound_effect = int(data)
        # print(data)

# Stop and close the stream
stream.stop_stream()
print("Stream is stopped")
stream.close()
p.terminate()

# End the thread
t1.join()
t2.join()
GPIO.cleanup()  # Clean the GPIO pin
```

touchscreen.py

```python
# By Wenyi Fu (wf223), Kaiyuan Xu (kx74)
# Final Project - Voice Changer

############################################################################
################# Import Libraries and Initialize GPIO #####################
############################################################################

from pygame.locals import *  # for event MOUSE variables
import RPi.GPIO as GPIO
from threading import Thread
from enum import Enum
import pygame
import time
import os
import random

# Bail out button
GPIO.setmode(GPIO.BCM)  # Set for GPIO numbering not pin numbers
GPIO.setup(27,GPIO.IN,pull_up_down=GPIO.PUD_UP)  # Bail out button

############################################################################
################### Button Callback Functions ##############################
############################################################################

def GPIO27_callback(channel):
    global code_run
    global thread_run
    code_run = False
    thread_run = False
    # If the bail out button is pressed, write something into the fifo
    # so that it doesn't get stuck there
    with open(sound_effect_fifo_path, 'w') as fifo:
        fifo.write(str(''))
        fifo.flush()

############################################################################
####################### FIFO Path Definition ###############################
############################################################################

# Create the fifo
# For sound effect mode
sound_effect_fifo_path = "/home/pi/final_project/sound_effect_fifo"
if not os.path.exists(sound_effect_fifo_path):
    os.mkfifo(sound_effect_fifo_path)
# For parameter value
par_value_fifo_path = "/home/pi/final_project/par_value_fifo"
if not os.path.exists(par_value_fifo_path):
    os.mkfifo(par_value_fifo_path)

############################################################################
########################## Parameter Declaration ###########################
############################################################################

# Environmental parameters
os.putenv('SDL_VIDEODRIVER','fbcon')  # Display on piTFT
os.putenv('SDL_FBDEV','/dev/fb0')
os.putenv('SDL_MOUSEDRV', 'TSLIB')  # Track mouse clicks on piTFT
os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')

# Initialize the pygame
pygame.init()
pygame.mouse.set_visible(False)  # Control the mouse visibility, set it to invisible
white = 255, 255, 255
black = 0, 0, 0
red = 255, 0, 0
green = 0, 255, 0
gray = 205, 193, 197
dark_red = 210, 0, 0
size = width,height = 320,240
screen = pygame.display.set_mode(size)  # Set to PiTFT screen size

# Initialize a mouse position that would not collide with the buttons
pos = -1,-1

# Sound effects
sound_effect = 0
class Effects(Enum):
    ELF = 1
    ECHO = 2
    ROBOT = 3
    ALIEN = 4

############################################################################
######################### FIFO Read/Write Threads ##########################
############################################################################

# Thread for writing into the fifo to change the sound effect
def fifo_write():
    global thread_run
    global sound_effect
    while thread_run:
        # Blocking write
        with open(sound_effect_fifo_path, 'w') as fifo:
            fifo.write(str(sound_effect))
            fifo.flush()
        time.sleep(0.05)

# Thread for reading from the fifo
def fifo_read():
    global thread_run
    global sound_effect_par_mode
    global sound_effect_par_value
    while thread_run:
        with open(par_value_fifo_path, 'r') as fifo:
            data = fifo.readline().strip()
            if (data):
                data_split = data.split("_")
                if (len(data_split) == 1):
                    # No mode selection: just a par value
                    sound_effect_par_value = int(data_split[0])
                elif (len(data_split) == 2):
                    # Mode + par value e.g. "delay_5" for echoing effect
                    sound_effect_par_mode = data_split[0]
                    sound_effect_par_value = int(data_split[1])

##############################################################################
############################ Main Code ######################################
##############################################################################

# Bail out button
code_run = True
thread_run = True
GPIO.add_event_detect(27,GPIO.FALLING,callback=GPIO27_callback,bouncetime=300)

# Start the Thread
t1 = Thread(target=fifo_write)
t2 = Thread(target=fifo_read)
t1.start()
t2.start()

# State Machine Variable for Debouncing
last_pressed = 1

# For the menu buttons
font = pygame.font.SysFont("Arial", 25)
buttons = {'optimus prime':(20,35), 'elf/monster':(20,83), 'hacker':(20,131), 'echo':(20,179)}
buttons_rect = {}  # Create a rect dictionary
sound_effect_name = {Effects.ELF.value: 'elf/monster', Effects.ROBOT.value: 'optimus prime', Effects.ECHO.value: 'echo', Effects.ALIEN.value: 'hacker'}

# For the shuffle icon and gif display
icon = pygame.transform.scale(pygame.image.load("/home/pi/final_project/shuffle_icon.png"),(40,40))
icon_rect = icon.get_rect(center=(270,70))
gif_size = 180,120
gif_pos = 140,120

# Hacker gif - frame by frame
hacker_f1 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-1.gif"),gif_size)
hacker_f1_rect = hacker_f1.get_rect(topleft=gif_pos)
hacker_f2 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-2.gif"),gif_size)
hacker_f2_rect = hacker_f2.get_rect(topleft=gif_pos)
hacker_f3 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-3.gif"),gif_size)
hacker_f3_rect = hacker_f3.get_rect(topleft=gif_pos)
hacker_f4 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-4.gif"),gif_size)
hacker_f4_rect = hacker_f4.get_rect(topleft=gif_pos)
hacker_f5 = 
```
pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-5.gif"),gif_size) hacker_f5_rect = hacker_f5.get_rect(topleft=gif_pos) hacker_f6 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-6.gif"),gif_size) hacker_f6_rect = hacker_f6.get_rect(topleft=gif_pos) gif_hacker = [hacker_f1, hacker_f2, hacker_f3, hacker_f4, hacker_f5, hacker_f6] gif_hacker_rect = [hacker_f1_rect, hacker_f2_rect, hacker_f3_rect, hacker_f4_rect, hacker_f5_rect, hacker_f6_rect] # Optimus Prime gif - frame by frame robot_f1 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-01.gif"),gif_size) robot_f1_rect = robot_f1.get_rect(topleft=gif_pos) robot_f2 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-02.gif"),gif_size) robot_f2_rect = robot_f2.get_rect(topleft=gif_pos) robot_f3 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-03.gif"),gif_size) robot_f3_rect = robot_f3.get_rect(topleft=gif_pos) robot_f4 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-04.gif"),gif_size) robot_f4_rect = robot_f4.get_rect(topleft=gif_pos) robot_f5 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-05.gif"),gif_size) robot_f5_rect = robot_f5.get_rect(topleft=gif_pos) robot_f6 = pygame.transform.scale(pygame.image.load_extended("/home/pi/final_project/frame-06.gif"),gif_size) robot_f6_rect = robot_f6.get_rect(topleft=gif_pos) gif_robot = [robot_f1, robot_f2, robot_f3, robot_f4, robot_f5, robot_f6] gif_robot_rect = [robot_f1_rect, robot_f2_rect, robot_f3_rect, robot_f4_rect, robot_f5_rect, robot_f6_rect] # For looping through the gif frames gif_frame_count = 0 # For the parameter display par_name_pos = (230,150) par_value_pos = (230,180) sound_effect_par_value = 0 sound_effect_par_mode = 0 while code_run: time.sleep(0.1) # Erase the screen screen.fill(black) # Draw the buttons for my_text, text_pos in 
buttons.items(): text_surface = font.render(my_text, True, white) rect = text_surface.get_rect(topleft=text_pos) # Draw the button background rect_button = rect.inflate(10, 10) if (sound_effect != 0): # Current sound effect if (sound_effect_name[sound_effect] == my_text): # The pressed button pygame.draw.rect(screen, dark_red, rect_button) else: pygame.draw.rect(screen, gray, rect_button) else: # All buttons has not been pressed in the initial state pygame.draw.rect(screen, gray, rect_button) # Draw the button text screen.blit(text_surface, rect) # Save rect for 'my_text' button for checking screen touches buttons_rect[my_text] = rect # Draw the randomize button screen.blit(icon, icon_rect) # For looping the gif if (gif_frame_count == 6): gif_frame_count = 0 # Display the sound effect parameter/gif if (sound_effect == Effects.ELF.value): text_surface = font.render("freq shift", True, white) rect = text_surface.get_rect(center=par_name_pos) screen.blit(text_surface, rect) text_surface = font.render(str(sound_effect_par_value), True, white) rect = text_surface.get_rect(center=par_value_pos) screen.blit(text_surface, rect) elif (sound_effect == Effects.ECHO.value): if (sound_effect_par_mode == "num"): text_surface = font.render("echo num", True, white) rect = text_surface.get_rect(center=par_name_pos) screen.blit(text_surface, rect) elif (sound_effect_par_mode == "delay"): text_surface = font.render("echo delay", True, white) rect = text_surface.get_rect(center=par_name_pos) screen.blit(text_surface, rect) text_surface = font.render(str(sound_effect_par_value), True, white) rect = text_surface.get_rect(center=par_value_pos) screen.blit(text_surface, rect) elif (sound_effect == Effects.ALIEN.value): screen.blit(gif_hacker[gif_frame_count], gif_hacker_rect[gif_frame_count]) gif_frame_count += 1 elif (sound_effect == Effects.ROBOT.value): screen.blit(gif_robot[gif_frame_count], gif_robot_rect[gif_frame_count]) gif_frame_count += 1 pygame.display.flip() # Detect touch 
screen button press for event in pygame.event.get(): if (event.type is MOUSEBUTTONUP): # If no touch, reset the pos value pos = -1,-1 last_pressed = 1 elif (event.type is MOUSEBUTTONDOWN): # If touched, store the position value if (last_pressed==1): pos = pygame.mouse.get_pos() pygame.display.flip() last_pressed = 0 # Reset the parameter, ensuring that the touched position will only be stored once for (my_text, rect) in buttons_rect.items(): # for saved button rects if (rect.collidepoint(pos)): if (my_text == 'elf/monster'): # If elf/monster button pressed sound_effect = Effects.ELF.value if (my_text == 'echo'): # If echo button pressed sound_effect = Effects.ECHO.value if (my_text == 'optimus prime'): # If optimus prime button pressed sound_effect = Effects.ROBOT.value if (my_text == 'hacker'): # If alien button pressed sound_effect = Effects.ALIEN.value # When the randomize button is pressed, choose randomly a sound effect other than the current one if icon_rect.collidepoint(pos): sound_effect = random.choice([effect.value for effect in Effects if effect != sound_effect]) t1.join() # End the thread t2.join() GPIO.cleanup()
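The parameter FIFO carries newline-terminated text messages in one of two shapes: a bare integer such as "3", or a mode and a value joined by an underscore such as "delay_5". The sketch below pulls that parsing out of `fifo_read` into a pure function so the message format can be tested off the Pi. The name `parse_par_message` and its `(mode, value)` return convention are hypothetical, not part of the original script. Returning `None` for malformed input is also an assumption: in the listing, a non-numeric value would raise `ValueError` and end the reader thread.

```python
from typing import Optional, Tuple

def parse_par_message(line: str, current_mode: Optional[str] = None
                      ) -> Optional[Tuple[Optional[str], int]]:
    """Parse one line from the parameter FIFO (hypothetical helper).

    Returns (mode, value). A value-only message keeps current_mode.
    Returns None for an empty or malformed message.
    """
    data = line.strip()
    if not data:
        return None
    parts = data.split("_")
    try:
        if len(parts) == 1:   # e.g. "3": value only, mode unchanged
            return current_mode, int(parts[0])
        if len(parts) == 2:   # e.g. "delay_5": mode + value
            return parts[0], int(parts[1])
    except ValueError:        # non-numeric value, e.g. "delay_x"
        return None
    return None               # more than one underscore: unknown format

print(parse_par_message("delay_5\n"))   # ('delay', 5)
print(parse_par_message("7", "num"))    # ('num', 7)
print(parse_par_message(""))            # None
```

Keeping the parser free of file I/O means the blocking `open()` on the FIFO stays in the thread, while the message format can be checked in isolation.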
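The shuffle button is meant to pick any effect except the one currently selected. `Effects` is a plain `Enum`, and a plain `Enum` member never compares equal to an `int`. A filter such as `effect != sound_effect` therefore keeps every member, including the current one, when `sound_effect` holds an integer. The sketch below isolates the selection step and compares `.value` instead. The helper name `pick_other_effect` and the injectable `rng` parameter are hypothetical additions for testing. Switching `Effects` to `IntEnum` would be an alternative fix.

```python
import random
from enum import Enum

class Effects(Enum):
    ELF = 1
    ECHO = 2
    ROBOT = 3
    ALIEN = 4

def pick_other_effect(current: int, rng=random) -> int:
    # rng may be the random module or a seeded random.Random instance.
    # Compare each member's int value with the stored int; comparing the
    # members themselves to an int would never match in a plain Enum.
    candidates = [effect.value for effect in Effects if effect.value != current]
    return rng.choice(candidates)

print(Effects.ELF == 1)  # False: a plain Enum member is not its value

rng = random.Random(0)
print(all(pick_other_effect(2, rng) != 2 for _ in range(100)))  # True
```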
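The `last_pressed` flag acts as a two-state machine. A press is accepted only when the previous touch event was a release, so repeated MOUSEBUTTONDOWN events from a single touch are handled once. The sketch below models that logic without pygame so it can run without a display. The class `TouchDebouncer`, the helper `accepted_presses`, and the "down"/"up" event strings are hypothetical stand-ins for the pygame event constants.

```python
from typing import Iterable, List, Optional, Tuple

Point = Tuple[int, int]

class TouchDebouncer:
    """Accept at most one press per touch, mirroring the last_pressed flag."""

    def __init__(self) -> None:
        self.released = True  # corresponds to last_pressed == 1

    def feed(self, event: str, pos: Point) -> Optional[Point]:
        if event == "up":                        # finger lifted: re-arm
            self.released = True
        elif event == "down" and self.released:
            self.released = False                # ignore "down" until an "up"
            return pos                           # accepted press position
        return None

def accepted_presses(events: Iterable[Tuple[str, Point]]) -> List[Point]:
    deb = TouchDebouncer()
    results = (deb.feed(event, pos) for event, pos in events)
    return [p for p in results if p is not None]

# Two "down" events from one touch count once; the next touch counts again
print(accepted_presses([("down", (30, 40)), ("down", (31, 40)),
                        ("up", (31, 40)), ("down", (270, 70))]))
# [(30, 40), (270, 70)]
```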